Lately I’ve been recommending RAID systems to most of my clients (of course, depending on their needs) in different flavours (some just need speed, some just need peace of mind and some are just greedy and want both).

Unfortunately, explaining WHY every single time can be somewhat tedious, as it’s not as easy as explaining why the latest Fantastic 4 movie is awful in every possible way and why I wish cinemas had some sort of refund policy similar to Steam’s (if you don’t know what Steam is, it’s a digital distribution platform that sells games. Yes, I do play games from time to time, I’m guilty).

So, I thought it’d be a good moment to explain what RAID systems are and why on earth you should care because, after all, don’t we want to use the full potential of the PC we paid for?

RAID, what is it?

RAID (Redundant Array of Independent Disks) is a data storage virtualization technology that combines multiple disks (magnetic [HDD] or solid state [SSD]) into one single logical unit for the purpose of data redundancy (security), increased transfer rate (speed) or, in certain types of RAID, both.

How does this sorcery work?

Data is distributed across several drives in different ways, called RAID levels (nothing to do with what level your Pokémon or World of Warcraft character is, though), each identified by a number (for example, RAID 0 or RAID 1). Each level offers a different balance of pros and cons depending on our needs. Every level above RAID 0 provides some degree of data redundancy (in layman’s terms, it keeps your data safe from hard drive failure), protecting against unrecoverable sectors and read errors, up to and including complete hard drive failure. That aside, there are three ways of getting RAID: “Hardware RAID”, “Software RAID” and “Firmware RAID”.

A brief history lesson:

The term “RAID” was coined by David Patterson, Garth A. Gibson and Randy Katz at the University of California, Berkeley, in 1987. The concept itself, however, had existed (at least in part) since 1977. In 1983, DEC started selling mirrored RA8X disk subsystems (what we now know as RAID 1), and in 1986 IBM filed a patent for what would later become RAID 5. As the technology became an industry standard, the word represented by the “I” in the RAID acronym changed from “inexpensive”, which was a marketing strategy at the time given hardware prices, to “independent”, which is a more accurate description of the technology anyway.

Until a few years ago, RAID was mostly an industry exclusive, and there it was pretty much a standard: it was unheard of not to set up some sort of RAID system for business data, as losing data there wasn’t exactly the same as losing a few photos your parents took of you as a kid (which, in retrospect, considering how weird those usually are, might not be a bad thing, and therefore not a great example). It was, however, uncommon in consumer grade hardware, because it was incredibly expensive and therefore not affordable for the average family. Today, with SATA as the standard and IDE gone, RAID at a basic level is cheap and easy to implement, which means most motherboards include it in one form or another.

What “levels” can I use?

I’m going to explain the levels we can generally find on today’s consumer motherboards, without much technical jargon, to keep it as simple as possible so you can decide which one to choose, if and when you do. I’ll leave out the levels that, while they exist, are generally reserved for enterprise grade systems and servers. If you’re curious, however, here are the modern-day RAID levels: Conventional: 0, 1, 2, 3, 4, 5, 5E, 6, 6E. Hybrid: 0+1, 1+0 (or 10), 30, 50, 100, 10+1. Proprietary: 50EE, Double Parity, 1.5, 7, S (or Parity RAID), Matrix (no, not like the film), Linux MD RAID 10, IBM ServeRAID 1E, Z.

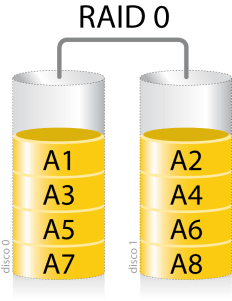

RAID 0 uses 2 or more drives in a “stripe”. Regardless of the operating system we use (any version of Windows, Linux, etc.), it will see the drives in a RAID 0 array as one single drive. Its size is the sum of the sizes of all the drives we add to the array (assuming they are all the same size; with mixed sizes, most controllers only use as much of each drive as the smallest one offers), and we get a considerable, if not huge, speed increase. The formula to estimate that speed is the following:

“Speed of the slowest drive” × “number of drives” − “5% of the total”.
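To make the rule of thumb above concrete, here is a minimal Python sketch of it. The function name and the drive speeds in the example are hypothetical, and the 5% overhead is simply the estimate from the formula, not a measured value; real-world throughput will vary with the controller, the drives and the workload.

```python
def raid0_speed_estimate(drive_speeds_mb_s: list[float]) -> float:
    """Rule-of-thumb RAID 0 throughput in MB/s:
    slowest drive x number of drives, minus 5% of that total."""
    if len(drive_speeds_mb_s) < 2:
        raise ValueError("RAID 0 needs at least 2 drives")
    total = min(drive_speeds_mb_s) * len(drive_speeds_mb_s)
    return total - 0.05 * total  # subtract the assumed 5% overhead


# Two SATA SSDs with hypothetical rated speeds of 550 and 520 MB/s:
print(round(raid0_speed_estimate([550, 520])))  # 988
```

Using the slowest drive as the baseline is what makes mixing fast and slow drives pointless: the faster drive ends up waiting for its partner.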

Unlike every other RAID level, RAID 0 has no redundancy or security at all. Before you think of putting 4 SSDs in RAID 0 for a read rate of 2 GB per second, you should know that if one of the drives dies, you lose the information on that drive and, unless you have some very specific equipment and tools, you essentially lose the information on the other drives in the array too. This is because RAID 0 splits everything we write into small blocks (stripes) and spreads them across all the drives in turn, without caring which file each block belongs to, so any file bigger than a single stripe ends up with pieces on every drive and no drive holds a complete copy of it. That same spreading is what makes RAID 0 so fast, but it comes at a price, so I don’t recommend, no matter how tempting it may be, using more than 2 drives in a RAID 0 array. Use it for something that benefits from the speed but doesn’t hold critical files: the operating system, for example, since in the worst case you can simply reinstall it.
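To see why losing one drive takes the whole array down, here is a toy Python model of striping. It is an illustration, not how any real controller lays out data: the 4-byte stripe size and the function names are assumptions made for readability (real arrays use much larger stripes).

```python
from typing import Optional

STRIPE_SIZE = 4  # bytes per stripe: tiny for readability; real arrays use far more


def stripe(data: bytes, n_drives: int, stripe_size: int = STRIPE_SIZE) -> list[list[bytes]]:
    """Cut data into fixed-size chunks and deal them out round-robin, like RAID 0."""
    drives: list[list[bytes]] = [[] for _ in range(n_drives)]
    for offset in range(0, len(data), stripe_size):
        chunk_number = offset // stripe_size
        drives[chunk_number % n_drives].append(data[offset:offset + stripe_size])
    return drives


def unstripe(drives: list[Optional[list[bytes]]]) -> bytes:
    """Read chunk 0 from drive 0, chunk 1 from drive 1, ... and glue them back together."""
    if any(d is None for d in drives):
        raise OSError("a member drive is missing, so the data cannot be reassembled")
    n = len(drives)
    total_chunks = sum(len(d) for d in drives)
    return b"".join(drives[k % n][k // n] for k in range(total_chunks))


photo = b"holiday-photos.jpg contents"
drives = stripe(photo, 2)
print(b"".join(drives[0]))  # b'holiphotpg cnts': not a single complete file on drive 0
assert unstripe(drives) == photo

drives[1] = None  # drive 1 dies
try:
    unstripe(drives)
except OSError as err:
    print(err)
```

Alternating between drives is also where the speed comes from: while one drive handles one chunk, the other can already be working on the next.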

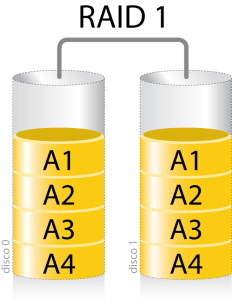

RAID 1 is the basic redundancy level. It requires at least 2 drives, and every drive in the array holds an identical copy of the data. Our operating system sees just one logical drive (just like with RAID 0), which is the size of the smallest drive in the array and writes only as fast as the slowest one. In the common two-drive setup, this means we lose the use of one drive out of every two.

Every bit we write is written to all the drives in the RAID 1 array at the same time. Because of this, if one of the drives develops read errors or corrupted sectors, or simply dies, our system is not affected: it just warns us that the drive needs replacing as soon as possible, while Windows (or whatever OS you’re running) keeps working, preventing both data and time loss.
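Here is a toy Python model of the mirroring described above, purely illustrative: the class and its methods are hypothetical, and each “drive” is just a dictionary of numbered blocks.

```python
from typing import Optional


class Raid1:
    """Toy RAID 1: every write goes to all healthy member drives."""

    def __init__(self, n_drives: int = 2) -> None:
        if n_drives < 2:
            raise ValueError("RAID 1 needs at least 2 drives")
        # Each drive maps block number -> data; None marks a dead drive.
        self.drives: list[Optional[dict[int, bytes]]] = [{} for _ in range(n_drives)]

    def write(self, block: int, data: bytes) -> None:
        for drive in self.drives:
            if drive is not None:
                drive[block] = data  # the same bytes land on every healthy drive

    def read(self, block: int) -> bytes:
        for drive in self.drives:
            if drive is not None:
                return drive[block]  # any surviving copy will do
        raise OSError("every mirror is gone")

    def fail(self, index: int) -> None:
        self.drives[index] = None

    def degraded(self) -> bool:
        return any(d is None for d in self.drives)


array = Raid1(2)
array.write(0, b"tax return")
array.fail(0)            # one drive dies
print(array.read(0))     # b'tax return': still readable from the mirror
print(array.degraded())  # True: time to replace the dead drive
```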

So basically, RAID 1 protects us from data loss caused by drive errors (not from human error: if you delete a file, that file is gone, obviously) at the expense of the extra drives’ capacity (half of it in the usual two-drive array). A RAID 1 array keeps working as long as at least one of its drives survives, at which point we simply replace the dead drive or drives and resync them with the surviving one. Today, the whole process can be done without even turning off our PC thanks to “hot plug” support (as long as it is enabled in our BIOS/UEFI), which is available on almost all motherboards with SATA connections and is commonly used in basic servers.

As with RAID 0, it’s best to use drives of the same speed, because the overall speed will be that of the slowest drive. The difference is that in RAID 0 we can experience speed fluctuations depending on which drive is in use at that very moment, whereas in RAID 1 the speed is consistent, because all the drives are written to at exactly the same time and therefore have to stick to the speed of the slowest one. So it would be wasteful to mix fast and slow drives in the same RAID 1 array. We should also use drives of the same size: if we use one 500 GB and one 1000 GB drive, the resulting RAID logical drive will only be 500 GB, so we’d be wasting space.
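A quick sketch of that space arithmetic, assuming (as is typical) that the array only uses as much of each drive as the smallest member offers; the function names are hypothetical.

```python
def usable_capacity_gb(level: str, sizes_gb: list[int]) -> int:
    """Usable size of a RAID 0 or RAID 1 array, assuming each member only
    contributes as much space as the smallest drive."""
    smallest = min(sizes_gb)
    if level == "0":
        return smallest * len(sizes_gb)  # striped: every drive adds capacity
    if level == "1":
        return smallest  # mirrored: every drive holds the same copy
    raise ValueError("this sketch only covers RAID 0 and RAID 1")


def unused_gb(sizes_gb: list[int]) -> int:
    """Space on the larger drives that the array never touches."""
    smallest = min(sizes_gb)
    return sum(size - smallest for size in sizes_gb)


drives = [500, 1000]
print(usable_capacity_gb("1", drives), "GB usable,", unused_gb(drives), "GB never used")
# 500 GB usable, 500 GB never used
```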

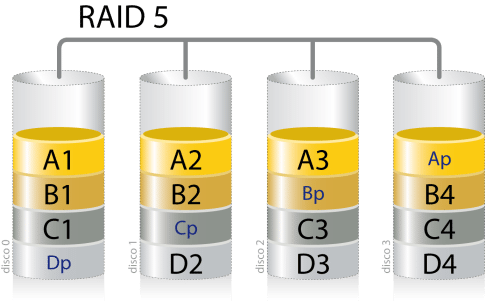

RAID 5 is a level we’re not going to talk much about because, while it is available on consumer motherboards, it just isn’t something I’d (or most people would) recommend: it has a high write cost, which considerably reduces the life expectancy of our drives. Its original intention is to extend the functionality of RAID 1 at a lower cost in drives (not in money, but in number of drives and usable space).

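RAID 5 requires a minimum of 3 drives. Like RAID 1, it is a redundancy level, but unlike RAID 1 we don’t lose 50% of our storage: with 3 drives we only lose 33% (one drive’s worth), and that share gets smaller as more drives are added. Instead of keeping a direct mirror copy as RAID 1 does, RAID 5 stores parity information (enough to rebuild the contents of any single failed drive) spread across all the drives, which is why it has a bigger continuous write cost: every write also means updating the parity. That is also why it isn’t recommended much today: RAID 6 (not available on consumer motherboards), which adds a second set of parity so the array can survive two drive failures, has pretty much replaced RAID 5 in servers and enterprise level systems.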
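RAID 5 parity is based on XOR: XOR-ing the data blocks of a stripe gives the parity block, and XOR-ing whatever survives rebuilds the missing block. The sketch below shows a single stripe on a hypothetical 3-drive array; a real array also rotates which drive holds the parity from one stripe to the next, and the block contents here are made up.

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """Byte-by-byte XOR of equally sized blocks (the RAID 5 parity operation)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe on a hypothetical 3-drive array: two data blocks and one parity block.
d0, d1 = b"SAVE", b"GAME"
parity = xor_blocks(d0, d1)  # written to the third drive

# The drive holding d1 dies: XOR-ing what survives rebuilds it.
rebuilt = xor_blocks(d0, parity)
print(rebuilt)  # b'GAME'

# Changing d0 also means recomputing and rewriting parity: the extra write cost.
d0 = b"LOAD"
parity = xor_blocks(d0, d1)
```

That last step is the write penalty mentioned above: every update touches the parity as well as the data.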

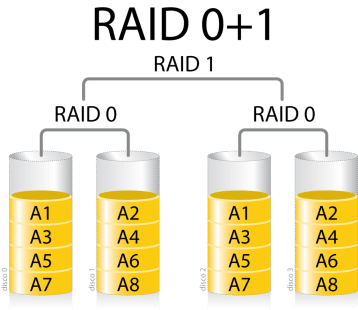

Hybrid RAID (0+1 and 1+0) is, in essence, a RAID array made of two other RAID arrays. Here we’re going to focus on combinations of RAID 0 and RAID 1. On one hand, we have the speed and appeal (especially with SSDs) that RAID 0 offers, along with the constant fear that if one of its drives dies, we’ll lose the data on all of them. On the other hand, we have the peace of mind that RAID 1 offers, along with the annoyance of gaining no speed and “losing” storage space, as half of it is used for what is, in essence, an insta-backup. From this we get the brilliant (yes, brilliant, there’s no other way of describing it) hybrid RAID, which gives us the advantages of both in one single array with only some of the disadvantages, not all of them. There are two variants of this type of RAID.

RAID 0+1 creates two RAID 0 arrays (speed and capacity with no redundancy) and binds them in a RAID 1 array which gives us redundancy in a system that normally wouldn’t have it.

This system can survive one or more drive failures in one of the two RAID 0 arrays that make up our RAID 0+1, even the loss of every drive in that array, but if we lose drives in both RAID 0 arrays (regardless of how many) we will lose all our data. In other words, losing just one drive in each RAID 0 array before the first failed drive has been replaced is enough to lose everything, which, while unlikely, is not impossible, and because of this risk RAID 0+1 is no longer used much at an enterprise level.

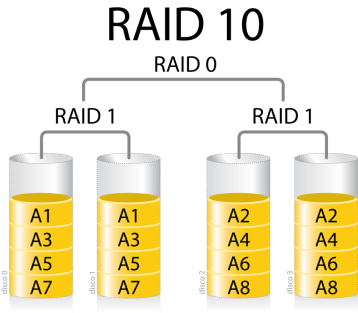

RAID 1+0 (or simply RAID 10) is, as far as I’m concerned, the ideal everyday consumer solution, because it offers something similar to RAID 0+1 but with fewer disadvantages. RAID 1+0 creates a RAID 0 array out of two RAID 1 arrays. As with RAID 0+1, we get a speed increase, although a somewhat smaller one, because in essence we’re only getting the speed of 2 drives put together (remember that each RAID 1 array runs at the speed of its slowest drive), following the formula given in the RAID 0 description. That said, we can lose drives from both RAID 1 arrays, as long as we don’t lose every drive in either one of them. You could argue that this still carries the same risk of complete failure: after all, losing both drives of one RAID 1 array within a RAID 1+0 seems just as unlikely as losing one drive in each RAID 0 array within a RAID 0+1. That is why there’s an ideal setup to prevent it.
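The “same risk” argument is worth checking. The sketch below models a hypothetical 4-drive array both ways and counts which two-drive failures each layout survives; the drive numbering and function names are illustrative only.

```python
from itertools import combinations

# Drives are numbered 0-3 in both layouts (hypothetical model).
RAID10_MIRRORS = [{0, 1}, {2, 3}]  # RAID 1+0: two mirrored pairs, striped together
RAID01_STRIPES = [{0, 1}, {2, 3}]  # RAID 0+1: two striped pairs, mirrored together


def raid10_survives(failed: set[int]) -> bool:
    # Data survives while every mirror still has at least one working drive.
    return all(mirror - failed for mirror in RAID10_MIRRORS)


def raid01_survives(failed: set[int]) -> bool:
    # Data survives while at least one stripe has no failed drive at all.
    return any(not (stripe & failed) for stripe in RAID01_STRIPES)


pairs = [set(c) for c in combinations(range(4), 2)]
print(sum(raid10_survives(p) for p in pairs), "of", len(pairs))  # 4 of 6
print(sum(raid01_survives(p) for p in pairs), "of", len(pairs))  # 2 of 6
```

Once one drive has failed, a RAID 1+0 array only dies if the second failure hits that drive’s mirror partner, while a RAID 0+1 array dies if it hits any drive in the other stripe, so in this model RAID 1+0 survives twice as many two-drive failures.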

The ideal setup would be for each RAID 1 array within the RAID 1+0 to contain 4 drives. Since every drive in a RAID 1 array holds a full copy of the data, this gives us the capacity of 2 drives overall (one per RAID 1 array) with the speed of 2 drives in RAID 0 (following the formula in the RAID 0 explanation), and the peace of mind that it is incredibly unlikely for all 4 drives in one of the RAID 1 arrays to die at the very same time. If 2 and 2 drives, or 3 and 1 drives die, we can keep working as if nothing had happened.

An ideal example for personal use would be 8x 256 GB SSDs in this RAID 1+0 layout, giving us 512 GB of usable storage and a read-write rate of around 1 GB/s, with the peace of mind that we’d have to be the most unlucky person in the world to lose our data to drive failure.
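Here is the arithmetic behind that example as a Python sketch. It assumes standard RAID 1 behaviour (every drive in a mirror holds a full copy, so each 4-drive mirror contributes one drive’s worth of space), the RAID 0 rule of thumb from earlier, and a hypothetical 550 MB/s per SSD; it also brute-forces the smallest number of failed drives that can destroy this layout.

```python
from itertools import combinations

DRIVE_GB = 256
SSD_MB_S = 550  # assumed speed per SSD, not a figure from the article
# Two RAID 1 arrays of 4 drives each (drives 0-3 and 4-7), striped together in RAID 0.
MIRRORS = [set(range(0, 4)), set(range(4, 8))]

usable_gb = DRIVE_GB * len(MIRRORS)  # each mirror contributes one drive's worth
speed = SSD_MB_S * len(MIRRORS)
speed -= 0.05 * speed  # the RAID 0 rule of thumb, with 2 stripe members


def survives(failed: set[int]) -> bool:
    """Data survives while every mirror keeps at least one working drive."""
    return all(mirror - failed for mirror in MIRRORS)


# Smallest number of failed drives that can destroy the array.
worst = min(
    k for k in range(1, 9)
    if any(not survives(set(c)) for c in combinations(range(8), k))
)
print(usable_gb, "GB,", round(speed), "MB/s, lost at", worst, "failures")
# 512 GB, 1045 MB/s, lost at 4 failures
```

Any three drives can fail and the data survives; it takes all four drives of one mirror failing to lose it.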

What methods can I use to obtain RAID?

RAID levels aside, there are 3 ways of getting and using RAID. In some cases we will have more than one of them at our disposal, and each one is considerably different from the others, so I’ve ordered them from least to most desirable; that way you’ll know which one to go for if you have more than one available.

Software RAID

This way of getting RAID doesn’t require any specific hardware and can be done on any computer with 2 or more drives (even IDE ones). All we need is Windows 7 or newer, or certain Linux distros. The obvious advantage is that we can set up RAID on any system without worrying about compatibility, and we can use drives of any connection type, size or speed. The disadvantage, however, is a considerable one, especially on low-powered computers: Software RAID uses a considerable amount of processor and RAM resources. We also don’t have the option to easily swap one drive for another (with Hardware and Firmware RAID we can do so without any issue: we just swap the drive or drives, resynchronise, and we’re done). Because of this, it isn’t advisable to use Software RAID on the drive our operating system lives on, since if the RAID array fails we won’t be able to boot into Windows or Linux to fix the problem. So, Software RAID should only be used for storage.
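On Linux, software RAID is usually managed with the mdadm tool. The Python sketch below only builds and prints the command for creating a two-drive RAID 1 array rather than running it; the helper function and the device names are hypothetical, and running the real command erases the member drives, so double-check them (for example with lsblk) first.

```python
import shlex


def mdadm_create_cmd(md_device: str, level: int, members: list[str]) -> list[str]:
    """Build (but do not run) an mdadm command that creates a software RAID array."""
    if len(members) < 2:
        raise ValueError("need at least 2 member devices")
    return [
        "mdadm", "--create", md_device,
        f"--level={level}",
        f"--raid-devices={len(members)}",
        *members,
    ]


# Hypothetical device names: check yours first, these drives get wiped.
cmd = mdadm_create_cmd("/dev/md0", 1, ["/dev/sdb", "/dev/sdc"])
print(shlex.join(cmd))
# mdadm --create /dev/md0 --level=1 --raid-devices=2 /dev/sdb /dev/sdc
```

Keeping the command as a list (and only joining it for display) avoids shell quoting surprises if you later pass it to subprocess.run yourself.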

Firmware RAID

This is the most common way of getting RAID today, because Firmware RAID refers to the integrated systems that come with consumer grade motherboards. That means that for most readers, assuming their motherboard supports it (which we’ll cover in our next article on the matter), this is the way to go. The main difference between this and Hardware RAID is that we still depend on our own processor and RAM, but given the motherboards that include this technology and the power of modern processors and RAM, we won’t notice the resources this type of RAID takes.

Hardware RAID

There’s a strong chance you will never use or even see a Hardware RAID system. These rely on a PCI/PCI-E card with its own processor and RAM, which means it doesn’t use any of our resources, which is great. On the other hand, the main issue with Hardware RAID is that if the card dies (which, granted, is unlikely for many years, as these things are seriously built to last) and it is a discontinued model (again, it would have to be very old for this to happen), you will have a problem: you will need the very same model to use the RAID array you had when your card died, and therefore to get access to your data.

That said, not all PCI/PCI-E RAID cards fall into the Hardware RAID category, as many of the cheaper models don’t have their own processor and/or RAM, so they fall into the Firmware RAID category. Unfortunately, the ones that do count as Hardware RAID are incredibly expensive, and because of this they tend to be used almost exclusively in servers and enterprise systems that require maximum security and efficiency.